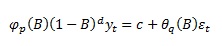

This can be rewritten as a multivariate generalization of the augmented Dickey-Fuller test:
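A sketch of that form in standard notation, assuming the VAR(p) model above is written as $X_t = A_1 X_{t-1} + \dots + A_p X_{t-p} + \varepsilon_t$ (the symbols $A_i$, $\Gamma_i$ and $\varepsilon_t$ are assumed here, and deterministic terms are omitted):

$$\Delta X_t = \Pi X_{t-1} + \sum_{i=1}^{p-1} \Gamma_i \, \Delta X_{t-i} + \varepsilon_t$$

With a single series (n = 1) this reduces to the augmented Dickey-Fuller regression, where the scalar coefficient on the lagged level plays the role of Π.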

This is the "transitory" form (it includes the level term $X_{t-1}$, whereas the "long-run" form includes $X_{t-p}$); therefore it holds that:
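Assuming the VAR(p) coefficient matrices are denoted $A_1, \dots, A_p$ and $\Gamma_i$ is the coefficient matrix on $\Delta X_{t-i}$ (notation assumed here, not taken from the original), the standard relations for the transitory form are:

$$\Pi = \sum_{i=1}^{p} A_i - I_n, \qquad \Gamma_i = -\sum_{j=i+1}^{p} A_j, \quad i = 1, \dots, p-1$$

As a quick check for p = 2: $\Delta X_t = (A_1 - I_n) X_{t-1} + A_2 X_{t-2} + \varepsilon_t = (A_1 + A_2 - I_n) X_{t-1} - A_2 \, \Delta X_{t-1} + \varepsilon_t$, so $\Gamma_1 = -A_2$. In the long-run form Π is the same matrix, but it multiplies $X_{t-p}$ and the short-run matrices become $\Gamma_i = -(I_n - A_1 - \dots - A_i)$.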

The rank r of the (n × n) matrix Π determines the number of cointegrating vectors (0 ≤ r < n). If r = 0, there is no cointegration. The impact matrix Π can be factored into the product of an (n × r) matrix of adjustment parameters α and an (r × n) matrix of cointegrating vectors β′.
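Written out, the factorization shows why the rows of β′ are called cointegrating vectors. This is a sketch in the usual notation, with the invertible (r × r) matrix $Q$ introduced here only for illustration:

$$\Pi X_{t-1} = \alpha \, (\beta' X_{t-1}), \qquad \alpha \beta' = (\alpha Q^{-1})(Q \beta')$$

The r linear combinations $\beta' X_{t-1}$ are the stationary deviations from long-run equilibrium, and α measures how strongly each series adjusts to them. The second identity shows that α and β are not unique, which is why software reports β under some normalization.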

For example, with two time series, the VAR(1) and VECM(1) models become:
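One way to write this out, assuming no deterministic terms and a VECM with no lagged differences (the coefficient names $a_{ij}$, $\alpha_i$ and $\beta_i$ are illustrative, not taken from the original):

$$\begin{pmatrix} x_{1,t} \\ x_{2,t} \end{pmatrix} = \begin{pmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{pmatrix} \begin{pmatrix} x_{1,t-1} \\ x_{2,t-1} \end{pmatrix} + \begin{pmatrix} \varepsilon_{1,t} \\ \varepsilon_{2,t} \end{pmatrix}$$

$$\begin{pmatrix} \Delta x_{1,t} \\ \Delta x_{2,t} \end{pmatrix} = \underbrace{\begin{pmatrix} a_{11} - 1 & a_{12} \\ a_{21} & a_{22} - 1 \end{pmatrix}}_{\Pi} \begin{pmatrix} x_{1,t-1} \\ x_{2,t-1} \end{pmatrix} + \begin{pmatrix} \varepsilon_{1,t} \\ \varepsilon_{2,t} \end{pmatrix}$$

If the two series are cointegrated (r = 1), Π has rank one and can be written as $\Pi = \begin{pmatrix} \alpha_1 \\ \alpha_2 \end{pmatrix} \begin{pmatrix} \beta_1 & \beta_2 \end{pmatrix}$, so both equations react to the same equilibrium error $\beta_1 x_{1,t-1} + \beta_2 x_{2,t-1}$.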

To determine r, the number of cointegrating vectors, we can perform the "trace" test of:

H0: There are r linearly independent cointegrating vectors (and n − r common stochastic trends)

H1: There are more than r cointegrating vectors

We usually start with r = 0 and perform the test; if H0 is rejected, we increase r by 1 and test again, repeating until H0 is no longer rejected. The first r at which H0 is not rejected is taken as the number of cointegrating vectors.
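For reference, the trace statistic behind this procedure is usually written as follows, where $T$ is the number of usable observations and $\hat\lambda_1 \ge \dots \ge \hat\lambda_n$ are the ordered eigenvalues estimated in Johansen's procedure (symbols assumed here):

$$\lambda_{\text{trace}}(r) = -T \sum_{i=r+1}^{n} \ln\left(1 - \hat\lambda_i\right)$$

Large values lead to rejection of H0. The related maximum-eigenvalue statistic uses only the next eigenvalue, $\lambda_{\max}(r) = -T \ln(1 - \hat\lambda_{r+1})$, and tests r against r + 1 cointegrating vectors. Both statistics have nonstandard distributions, so the critical values come from simulated tables that depend on the deterministic terms in the model.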

An example in R, taken from Bernhard Pfaff's tutorial "Analysis of Integrated and Cointegrated Time Series" (it uses the urca and vars packages):

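library(urca)  # ca.jo, cajorls

library(vars)  # vec2var, predict, irf, fevd and the diagnostic tests

set.seed(12345)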

e1 <- rnorm(250, 0, 0.5)

e2 <- rnorm(250, 0, 0.5)

e3 <- rnorm(250, 0, 0.5)

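u1.ar1 <- arima.sim(model = list(ar = 0.75), innov = e1, n = 250)  # stationary AR(1) disturbance

u2.ar1 <- arima.sim(model = list(ar = 0.3), innov = e2, n = 250)  # stationary AR(1) disturbance

y3 <- cumsum(e3)  # random walk: the single common stochastic trend

y1 <- 0.8 * y3 + u1.ar1  # cointegrated with y3

y2 <- -0.3 * y3 + u2.ar1  # cointegrated with y3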

ymax <- max(c(y1, y2, y3))

ymin <- min(c(y1, y2, y3))

plot(y1, ylab = "", xlab = "", ylim = c(ymin, ymax))

lines(y2, col = "red")

lines(y3, col = "blue")

y.mat <- data.frame(y1, y2, y3)

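vecm1 <- ca.jo(y.mat, type = "eigen", spec = "transitory")  # Johansen test, maximum-eigenvalue statistic

vecm2 <- ca.jo(y.mat, type = "trace", spec = "transitory")  # Johansen test, trace statistic

summary(vecm2)  # test statistics and critical values for each r

vecm.r2 <- cajorls(vecm1, r = 2)  # restricted VECM estimated by OLS with r = 2

vecm.level <- vec2var(vecm1, r = 2)  # VECM converted to its VAR-in-levels representation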

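vecm.pred <- predict(vecm.level, n.ahead = 10)  # 10-step-ahead forecasts

fanchart(vecm.pred)  # fan chart of the forecasts

vecm.irf <- irf(vecm.level, impulse = 'y3', response = 'y1', boot = FALSE)  # response of y1 to a shock in y3

vecm.fevd <- fevd(vecm.level)  # forecast error variance decomposition

vecm.norm <- normality.test(vecm.level)  # residual normality tests

vecm.arch <- arch.test(vecm.level)  # ARCH-LM test on the residuals

vecm.serial <- serial.test(vecm.level)  # Portmanteau test for residual serial correlation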